In an era where data drives business strategy and decision-making, selecting the right technology stack for data science projects has become a critical consideration.

The technology stack chosen for a data science project can profoundly affect its efficiency, scalability, and overall performance. However, the sheer number of available tools, libraries, and frameworks can make the selection process overwhelming and complex.

To make a well-informed decision, it is important to weigh several key factors when determining the ideal technology stack for a data science project.

Selecting a Technology Stack for Data Science Projects

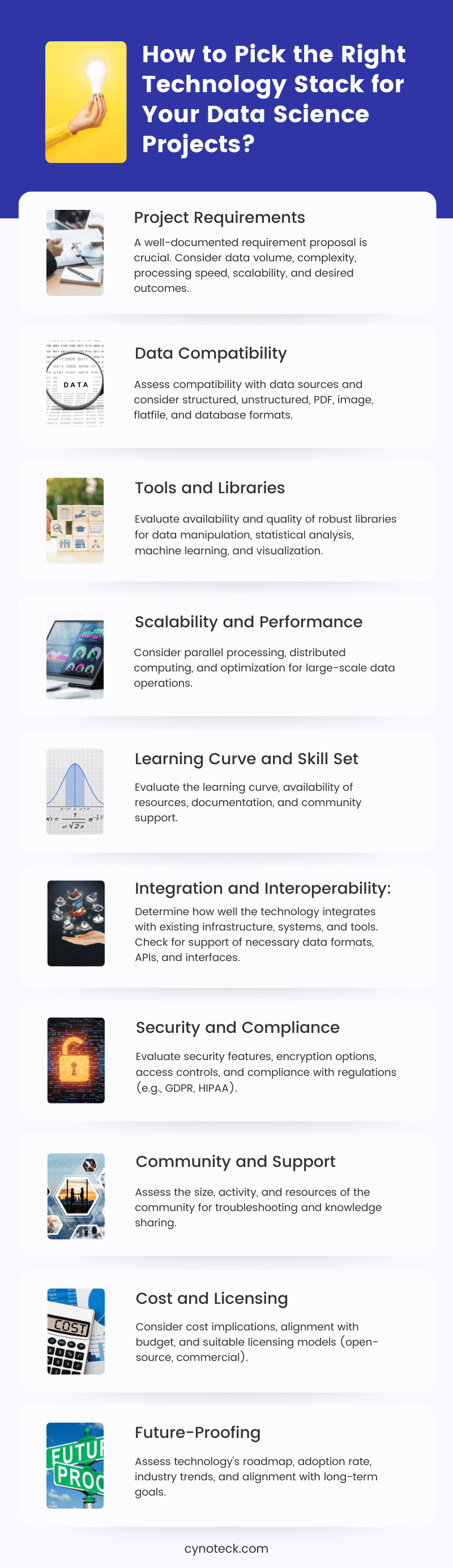

When selecting a technology for data science projects, there are several key factors to consider. Here is a high-level list of the most important ones:

1. Project requirements:

This is one of the most important, and most often underrated, factors. A well-documented requirements proposal goes a long way toward carrying a project to successful completion. On the other hand, half-baked or miscommunicated requirements create unnecessary friction throughout the project life cycle.

Consider factors such as data volume, complexity, processing speed, scalability, and the desired outcomes. Some technologies may offer many features you will never need, while others may be missing key ones.

For example, a small dataset can be handled in a CSV file; a larger one may need a full-blown database, and a larger one still may call for a distributed file system such as HDFS (Hadoop Distributed File System). Aligning your requirements with the technology's capabilities is therefore crucial.
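To make the CSV, database, HDFS progression concrete, here is a minimal Python sketch that maps an estimated data volume to a rough storage tier. The thresholds (`CSV_LIMIT`, `DATABASE_LIMIT`) and the function name `suggest_storage` are hypothetical placeholders, not industry standards; real cut-offs depend on your hardware, query patterns, and concurrency needs.

```python
# Hypothetical size thresholds in bytes; tune these to your own hardware and workload.
CSV_LIMIT = 1 * 1024**3        # assume ~1 GB is still comfortable as a flat file
DATABASE_LIMIT = 1 * 1024**4   # assume ~1 TB still fits a single database server


def suggest_storage(size_bytes: int) -> str:
    """Map an estimated data volume to a rough storage tier (illustrative only)."""
    if size_bytes < 0:
        raise ValueError("size_bytes must be non-negative")
    if size_bytes <= CSV_LIMIT:
        return "flat file (e.g. CSV)"
    if size_bytes <= DATABASE_LIMIT:
        return "relational database"
    return "distributed storage (e.g. HDFS)"


# Example: 50 MB, 200 GB and 5 TB land in three different tiers.
for size in (50 * 1024**2, 200 * 1024**3, 5 * 1024**4):
    print(f"{size:>16,} bytes -> {suggest_storage(size)}")
```

Data volume is only one input; processing speed and access patterns can push a small dataset into a database, or a large one into a columnar file format, regardless of these thresholds.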

2. Data compatibility:

Assess the compatibility of the technology with your data sources. Consider the types and formats of data you’ll be working with, as well as any data integration or preprocessing requirements.

Ensure that the technology you choose can handle your data effectively. Key questions to ask include: Is the data structured or unstructured? Does it come as PDFs, images, flat files, database tables, or something else?
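One way to start answering these questions is to inventory your sources by format. The sketch below groups files into structured, semi-structured, and unstructured buckets. The extension mapping and the helper names `classify_source` and `inventory` are illustrative assumptions, and the check looks only at file extensions, so treat it as a first pass rather than a reliable inspection of file contents.

```python
from pathlib import Path

# Hypothetical extension-to-category mapping; extend it for your own sources.
CATEGORIES = {
    "structured": {".csv", ".tsv", ".xlsx", ".parquet", ".sqlite", ".db"},
    "semi-structured": {".json", ".xml", ".yaml", ".yml"},
    "unstructured": {".pdf", ".png", ".jpg", ".jpeg", ".txt", ".docx"},
}


def classify_source(path: str) -> str:
    """Return a coarse data category based only on the file extension."""
    suffix = Path(path).suffix.lower()
    for category, suffixes in CATEGORIES.items():
        if suffix in suffixes:
            return category
    return "unknown"


def inventory(paths: list[str]) -> dict[str, list[str]]:
    """Group source files by category to see which kinds of tooling each group needs."""
    groups: dict[str, list[str]] = {}
    for p in paths:
        groups.setdefault(classify_source(p), []).append(p)
    return groups


print(inventory(["sales.csv", "contracts.pdf", "events.json", "scan.png"]))
```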

3. Tools and libraries:

Evaluate the availability and quality of tools and libraries that are compatible with the technology. Look for robust libraries for data manipulation, statistical analysis, machine learning, and visualization.

A rich ecosystem of tools and libraries can significantly streamline your data science workflow. Imagine investing heavily in a piece of software, only to discover mid-implementation that it lacks capabilities you need.
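Before committing to a stack, it can help to confirm that the libraries you plan to rely on are actually available in your target environment. This sketch uses the standard-library function `importlib.util.find_spec`; the `REQUIRED` mapping and the helper name `missing_capabilities` are hypothetical examples. It only checks top-level module names, because a dotted name whose parent package is missing raises an error instead of returning `None`.

```python
import importlib.util

# Hypothetical requirement list: capability -> top-level module that provides it.
REQUIRED = {
    "data manipulation": "pandas",
    "numerical computing": "numpy",
    "machine learning": "sklearn",
    "visualization": "matplotlib",
}


def missing_capabilities(required: dict[str, str]) -> list[str]:
    """Return the capabilities whose backing module cannot be found in this environment."""
    return [
        capability
        for capability, module in required.items()
        if importlib.util.find_spec(module) is None  # None means "not installed"
    ]


gaps = missing_capabilities(REQUIRED)
print("Missing capabilities:", gaps or "none")
```

Installation is only the first hurdle; the quality, maintenance status, and licensing of each library still need to be judged separately.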

4. Scalability and performance:

Consider the scalability and performance characteristics of the technology. Determine whether it can handle the volume and velocity of data you expect to process.

Assess factors such as parallel processing capabilities, distributed computing options, and the ability to optimize performance for large-scale data operations.
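Much of what parallel and distributed frameworks do boils down to splitting data into chunks, computing a partial result for each chunk independently, and merging the partial results. The minimal Python sketch below shows that split-aggregate-merge pattern on a CSV stream using only the standard library. It runs sequentially, and the column names (`region`, `sales`) and helper names are hypothetical.

```python
import csv
import io
from itertools import islice
from typing import Iterable, Iterator


def chunks(rows: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Yield successive lists of at most `size` rows so memory use stays bounded."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch


def partial_sums(batch: list[dict], key: str, value: str) -> dict[str, float]:
    """'Map' step: aggregate one chunk independently of all the others."""
    out: dict[str, float] = {}
    for row in batch:
        out[row[key]] = out.get(row[key], 0.0) + float(row[value])
    return out


def merge(totals: dict[str, float], partial: dict[str, float]) -> dict[str, float]:
    """'Reduce' step: fold one chunk's partial result into the running totals."""
    for k, v in partial.items():
        totals[k] = totals.get(k, 0.0) + v
    return totals


def total_by_key(csv_file, key: str, value: str, chunk_size: int = 10_000) -> dict[str, float]:
    """Sum `value` per `key` chunk by chunk instead of loading the whole file at once.

    Chunks are processed sequentially here; because partial_sums needs no shared
    state, the same chunks could be handed to separate processes or machines.
    """
    totals: dict[str, float] = {}
    for batch in chunks(csv.DictReader(csv_file), chunk_size):
        merge(totals, partial_sums(batch, key, value))
    return totals


data = io.StringIO("region,sales\nnorth,10\nsouth,5\nnorth,2.5\n")
print(total_by_key(data, "region", "sales", chunk_size=2))  # {'north': 12.5, 'south': 5.0}
```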

A good example is building a solution in an Excel spreadsheet and later discovering that the data has more rows than the sheet can hold, or that the file becomes too slow to work with at that size.
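For the spreadsheet case, the ceiling is concrete: an Excel worksheet (Excel 2007 and later) holds at most 1,048,576 rows. A short check like the one below, which streams a CSV and counts its records without loading it into memory, can flag the problem before a solution is built around a spreadsheet. The helper names are illustrative.

```python
import csv
import io

# Microsoft Excel worksheets (2007 and later) hold at most 1,048,576 rows.
EXCEL_MAX_ROWS = 1_048_576


def count_rows(csv_file) -> int:
    """Count CSV records, including the header, by streaming through the file."""
    return sum(1 for _ in csv.reader(csv_file))


def fits_in_excel(csv_file) -> bool:
    """True if every record (header included) fits on a single worksheet."""
    return count_rows(csv_file) <= EXCEL_MAX_ROWS


sample = io.StringIO("id,amount\n1,9.99\n2,4.50\n")
print(count_rows(sample))  # 3 (header + 2 data rows)
```

For a file on disk, open it with `open(path, newline="")` and pass the file object in. Staying under the limit does not guarantee acceptable performance; formulas and lookups over hundreds of thousands of rows can still be slow.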

5. Learning curve and skill set:

Evaluate the learning curve and the skill set required to work with the technology. Consider the availability of resources, documentation, and community support for learning and troubleshooting.

Assess whether your team possesses the necessary skills or if you are willing to invest in training or hiring individuals with expertise in the chosen technology.

6. Integration and interoperability:

Determine how well the technology integrates with your existing infrastructure, systems, and tools. Consider whether it supports the necessary data formats, APIs, and interfaces required for smooth data integration and collaboration with other components of your data pipeline or business processes.
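Interoperability often comes down to agreeing on a data contract between components. As an illustration, the sketch below converts CSV output from one stage into the JSON array a downstream API might expect, casting each field to an agreed type and failing loudly when a column is missing. The `SCHEMA` fields and the function name `to_api_payload` are hypothetical.

```python
import csv
import io
import json

# Hypothetical contract the downstream component expects: field name -> type.
SCHEMA = {"customer_id": int, "country": str, "revenue": float}


def to_api_payload(csv_text: str, schema: dict = SCHEMA) -> str:
    """Convert CSV rows to a JSON array, casting fields to the types the consumer expects.

    Raises ValueError if a required column is missing or a value cannot be cast.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = set(schema) - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    records = [{field: cast(row[field]) for field, cast in schema.items()} for row in reader]
    return json.dumps(records)


payload = to_api_payload("customer_id,country,revenue\n7,DE,120.5\n")
print(payload)  # [{"customer_id": 7, "country": "DE", "revenue": 120.5}]
```

Validating at the boundary keeps a format mismatch from surfacing as a confusing error several steps further down the pipeline.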

7. Security and compliance:

Evaluate the security features and compliance capabilities of the technology. Data science projects often involve sensitive data, so it’s essential to ensure that the technology provides adequate security measures, encryption options, access controls, and compliance with relevant regulations (e.g., GDPR, HIPAA).
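As one example of a technical safeguard, direct identifiers can be pseudonymized before data reaches analysts. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library): the same identifier always maps to the same token, so joins across tables still work, but the token cannot be recomputed from a guessed identifier without the key. This is an illustration, not a compliance recipe; under GDPR, pseudonymized data is generally still personal data, and key management matters as much as the hashing itself. The function name is hypothetical.

```python
import hashlib
import hmac
import secrets


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC) as a hex string.

    Same input + same key -> same token, so records can still be joined.
    Without the key, the token cannot be recomputed from a guessed value.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


key = secrets.token_bytes(32)  # in practice, load the key from a secrets manager
token = pseudonymize("patient-00123", key)
print(len(token))  # 64
```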

8. Community and support:

Assess the size and activity of the community around the technology. A vibrant community can provide valuable resources, forums, and support for troubleshooting and knowledge sharing. It also indicates the technology’s popularity and longevity.

9. Cost and licensing:

Consider the cost implications of the technology. Evaluate whether it aligns with your budget and offers a suitable licensing model, such as open-source or commercial.

Account for any additional costs associated with training, maintenance, support, or scaling the technology in the long run.

This becomes a key factor when estimating time and effort (and hence project cost). Some solutions are plug-and-play but carry a recurring licensing cost (i.e., a monthly or annual fee).

On the other hand, building from scratch offers the advantage of customization, but development costs can go through the roof. The goal should be the right mix of off-the-shelf technology and custom development.
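The licensing-versus-building trade-off can be framed as a simple break-even calculation: cumulative licensing spend (setup fee plus monthly fee times months) against cumulative build spend (upfront development plus monthly maintenance times months). The sketch below finds the first month in which licensing has cost at least as much as building. All figures in the example, and the function name `break_even_month`, are hypothetical; a real estimate would also include training, support, and scaling costs.

```python
from typing import Optional


def break_even_month(
    license_setup: float,
    license_monthly: float,
    build_upfront: float,
    build_maintenance_monthly: float,
    horizon_months: int = 60,
) -> Optional[int]:
    """Return the first month in which cumulative licensing spend meets or exceeds
    cumulative build spend, or None if licensing stays cheaper over the whole horizon."""
    for month in range(1, horizon_months + 1):
        licensed = license_setup + license_monthly * month
        built = build_upfront + build_maintenance_monthly * month
        if licensed >= built:
            return month
    return None


# Hypothetical figures: 2,000 setup + 1,500/month licence vs 30,000 build + 300/month upkeep.
print(break_even_month(2_000, 1_500, 30_000, 300))  # 24
```

A result of 24 would mean that if the solution is expected to live well beyond two years, building starts to pay off; for a short-lived project, the licensed option remains cheaper.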

10. Future-proofing:

Finally, assess the technology’s roadmap and future development plans. Consider its adoption rate, industry trends, and whether it aligns with your long-term goals and the evolving landscape of data science. Choose a technology that is likely to remain relevant and supported in the coming years.

By considering these factors, you can make an informed decision when selecting a technology for your data science projects, maximizing your chances of success and efficiency.
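One common way to combine these factors is a weighted scoring matrix: give each factor a weight reflecting its importance to your project, score each candidate stack per factor (here from 1 to 5), and compare the weighted totals. The sketch below is a minimal version; the factor keys, weights, and scores for the hypothetical "Stack A" and "Stack B" are illustrative assumptions, not recommendations.

```python
import math

# Hypothetical weights for the ten factors above; they should sum to 1.0.
WEIGHTS = {
    "requirements": 0.15, "data_compatibility": 0.10, "tools_libraries": 0.10,
    "scalability": 0.15, "skill_set": 0.10, "integration": 0.10,
    "security": 0.10, "community": 0.05, "cost": 0.10, "future_proofing": 0.05,
}
assert math.isclose(sum(WEIGHTS.values()), 1.0)


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of per-factor scores; every weighted factor must be scored."""
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the weighted factors")
    return sum(weights[f] * scores[f] for f in weights)


def rank(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (name, score) pairs, highest weighted score first."""
    return sorted(
        ((name, weighted_score(s)) for name, s in candidates.items()),
        key=lambda item: item[1],
        reverse=True,
    )


candidates = {
    "Stack A": {**dict.fromkeys(WEIGHTS, 4), "cost": 2},         # strong overall, expensive
    "Stack B": {**dict.fromkeys(WEIGHTS, 3), "scalability": 5},  # average, scales well
}
for name, score in rank(candidates):
    print(f"{name}: {score:.2f}")  # Stack A: 3.80, then Stack B: 3.30
```

Scoring is subjective, so the ranking is best used to structure the discussion and expose trade-offs rather than to make the decision automatically.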

Conclusion:

The significance of selecting the right technology stack for data science projects cannot be overstated, as it directly influences the success and efficiency of such endeavors.

By meticulously evaluating factors such as project requirements, data compatibility, tools and libraries, scalability and performance, learning curve and skill set, integration and interoperability, security and compliance, community and support, cost and licensing, and future-proofing, one can make a judicious decision that aligns with their specific needs and long-term goals.

When it comes to picking the right technology stack, it is important to understand that there is no one-size-fits-all answer; rather, a careful examination of many factors is required.

By doing so, organizations and professionals can increase their chances of success, streamline their data science operations, and stay ahead in the fast-evolving field of data science.
