The purpose of developing a machine learning model is to solve a problem, and a model can only do that once it is in production and actively used by its consumers. Model deployment is therefore an important part of building a model.

There are several approaches to putting models into production, each with different advantages depending on the use case. Many data scientists regard model deployment as a software engineering task that should be handled by software engineers, since the required skills are more closely aligned with their day-to-day work.

Tools such as Kubeflow and TFX can simplify the complete process of model deployment, and data scientists should learn and use them early. Tools such as Dataflow also allow data scientists to work more closely with engineering teams, because they make it possible to set up staging environments in which parts of a data pipeline can be tested before deployment.

What is Model Deployment?

Deployment is the process of integrating a machine learning model into an existing production environment so that it can drive practical business decisions based on data. It is one of the last steps in the machine learning life cycle.

Creating a model is usually not the end of the project. Depending on the requirements, the deployment phase can be as simple as generating a report or as complex as implementing a repeatable data science process.

Consider a credit card company: it may need to deploy a trained model or a set of models (for example, a meta-learner or neural networks) to instantly identify transactions that have a high probability of being fraudulent.

Even if the analyst will not carry out the deployment work, the consumer of the model needs to understand up front what actions will be required to make use of it.

Essential Steps in Model Deployment:

Here are some basic steps to take to get your model ready for deployment. Keep them in mind as your model moves from research and development to production.

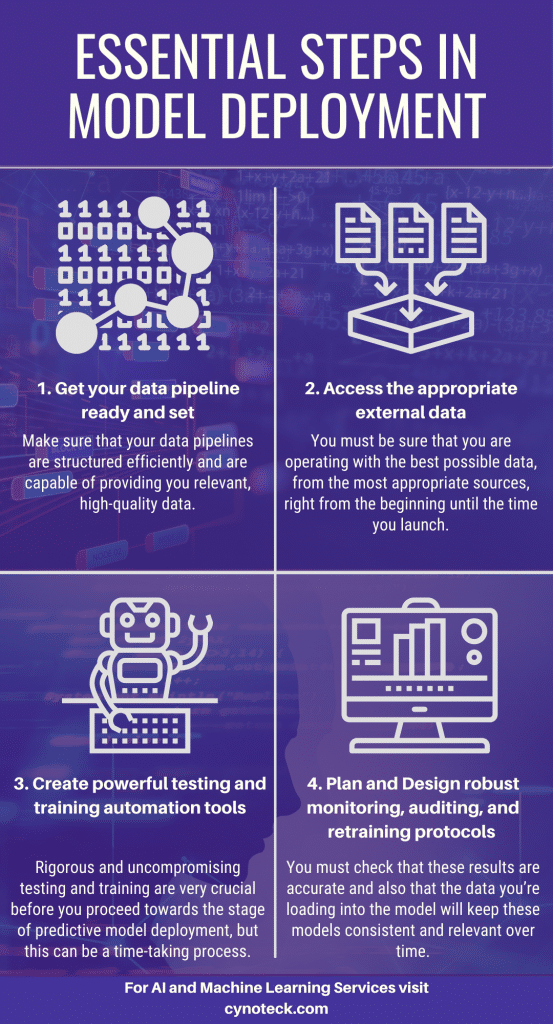

Step 1) Get your data pipeline ready and set

Before reaching the predictive model deployment stage, make sure that your data pipelines are structured efficiently and can supply relevant, high-quality data.

The most important question here is what happens when you move from the proof-of-concept (POC) stage, where you usually work with a comparatively small data sample, to production, where much larger volumes of data are drawn from a wide variety of datasets.

It’s essential to figure out how you will scale your data pipelines and your models once deployed.
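One way to keep a pipeline from breaking when data volume grows is to validate and process records lazily in fixed-size batches instead of loading everything at once. The following is a minimal sketch under stated assumptions: the field names `amount` and `merchant_id` and the helpers `is_valid` and `batched` are hypothetical and only serve as illustration.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List

# Hypothetical schema: these field names are illustrative assumptions.
REQUIRED_FIELDS = ("amount", "merchant_id")


def is_valid(record: Dict) -> bool:
    """Accept a record only if every required field is present
    and the amount is a non-negative number."""
    if any(record.get(field) is None for field in REQUIRED_FIELDS):
        return False
    amount = record["amount"]
    return isinstance(amount, (int, float)) and amount >= 0


def batched(records: Iterable[Dict], batch_size: int) -> Iterator[List[Dict]]:
    """Lazily yield lists of up to batch_size valid records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    valid = (r for r in records if is_valid(r))
    while True:
        batch = list(islice(valid, batch_size))
        if not batch:
            return
        yield batch
```

Because `batched` consumes any iterable, the same code can be fed a small in-memory sample during the POC and a streaming source in production; only the input changes.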

Step 2) Access the appropriate external data

When you are developing a predictive model for production, you must be sure that you are working with the best possible data, from the most appropriate sources, from the very beginning until launch. If the data is already stale, your carefully crafted models may not be of much use.

Another part of this challenge is getting hold of enough historical data to form a full picture. Some businesses collect all the data they need internally, but for complete context and perspective you should also consider external data sources.

Examples of external datasets include geospatial data, company data, people data such as spending activity or internet behavior, and time-based data, which covers everything from climate patterns to economic trends.

Step 3) Create powerful testing and training automation tools

Rigorous testing and training are crucial before you proceed to predictive model deployment, but they can be time-consuming. To avoid slowing down, automate as much as you can.

This doesn’t mean just picking up a few time-saving tricks or tools. Your goal should be to produce models that can eventually run without any effort or action on your part.

With the right technology, you can automate everything from data collection and feature engineering to training and building your models. This also helps make your models scalable without multiplying your workload.
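As an illustration of what such automation might look like, here is a minimal sketch of a pipeline that trains a model, evaluates it on held-out data, and only marks it for promotion if it passes a quality gate. The toy threshold "model", the function names, and the `min_accuracy` value of 0.9 are all assumptions made for the example, not a recommended setup.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[float, int]        # (feature value, label 0 or 1)
Model = Callable[[float], int]


def accuracy(model: Model, examples: List[Example]) -> float:
    """Fraction of examples the model labels correctly."""
    return sum(1 for x, y in examples if model(x) == y) / len(examples)


def train_threshold_model(examples: List[Example]) -> Model:
    """Toy trainer: pick the cut-off (from the observed values)
    that gives the best training accuracy."""
    best_cut, best_score = None, -1.0
    for cut in sorted({x for x, _ in examples}):
        score = accuracy(lambda x, c=cut: int(x >= c), examples)
        if score > best_score:
            best_cut, best_score = cut, score
    return lambda x, c=best_cut: int(x >= c)


def run_pipeline(train: List[Example], holdout: List[Example],
                 min_accuracy: float = 0.9) -> Dict:
    """Train, evaluate on held-out data, and promote only if the gate passes."""
    model = train_threshold_model(train)
    score = accuracy(model, holdout)
    return {"model": model,
            "holdout_accuracy": score,
            "promoted": score >= min_accuracy}
```

A scheduler could call `run_pipeline` whenever new data arrives and deploy the returned model only when `promoted` is true, which keeps a weak retrain from reaching production without manual review.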

Step 4) Plan and design robust monitoring, auditing, and retraining protocols

Before you deploy and use your predictive model, you need to know whether it actually delivers the kind of results you are looking for. Check that these results are accurate and that the data you feed into the model will keep it consistent and relevant over time. Stale, low-quality data can cause model drift, leading to inaccurate outcomes.

This means you must create training processes and pipelines that draw in new data, monitor your internal data sources, and tell you which features are still providing important insights.

Never get complacent about this, or your models may steer your business in unhelpful directions. It’s essential to have processes in place for monitoring your results, so that you are not simply feeding more and more of the wrong kind of data into your predictive model.

You should also run A/B tests to compare the performance of different versions of these models.
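A common way to set up such a comparison is to route each user deterministically to one of two model versions and then aggregate the outcomes per version. The sketch below assumes string user IDs and a simple hash-based split; `assign_variant` and `accuracy_by_variant` are hypothetical helper names.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def assign_variant(user_id: str, share_b: float = 0.5) -> str:
    """Deterministically map a user to model version "A" or "B"."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32   # value in [0, 1)
    return "B" if bucket < share_b else "A"


def accuracy_by_variant(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Aggregate (variant, prediction_was_correct) pairs into accuracy per variant."""
    totals = defaultdict(lambda: [0, 0])   # variant -> [correct, seen]
    for variant, correct in outcomes:
        totals[variant][0] += int(correct)
        totals[variant][1] += 1
    return {variant: c / n for variant, (c, n) in totals.items()}
```

Hashing the user ID keeps each user on the same version across requests without storing any assignment state. Before acting on the per-variant numbers, a real test would also need enough traffic and a significance check, which this sketch leaves out.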

Final Words: Streamlining the process

Models are trained on data, and their predictive ability is tied to the quality of that data. As time passes and the environment around the model changes, its accuracy begins to decline.

This phenomenon is called “drift,” and it can be monitored and even used as a trigger to retrain the model. Data should also be monitored and compared with past data.

Two questions are worth asking: Have the statistical properties of the data changed? Are there different or additional outliers compared with before?
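These checks can be sketched for a single numeric feature by comparing a current sample against a reference sample from the past. This is only one simple heuristic among many: the function name `drift_report` and all three thresholds are assumptions chosen for illustration, and real systems often use more robust statistical tests.

```python
from statistics import mean, stdev
from typing import Dict, Sequence


def drift_report(reference: Sequence[float], current: Sequence[float],
                 z_threshold: float = 3.0,
                 mean_shift_limit: float = 0.5,
                 outlier_rate_limit: float = 0.05) -> Dict:
    """Compare current data with reference data for one numeric feature."""
    ref_mean, ref_std = mean(reference), stdev(reference)
    if ref_std == 0:
        raise ValueError("reference data has zero variance")

    def outlier_rate(values: Sequence[float]) -> float:
        # Share of values more than z_threshold reference std devs from the reference mean.
        return sum(abs(v - ref_mean) / ref_std > z_threshold for v in values) / len(values)

    # Shift of the current mean, measured in reference standard deviations.
    shift = abs(mean(current) - ref_mean) / ref_std
    current_rate = outlier_rate(current)
    return {
        "mean_shift": shift,
        "reference_outlier_rate": outlier_rate(reference),
        "current_outlier_rate": current_rate,
        "retrain": shift > mean_shift_limit or current_rate > outlier_rate_limit,
    }
```

The `retrain` flag is the kind of signal that could trigger a retraining job automatically, while the individual numbers are useful for a monitoring dashboard.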

So, the key is to automate and streamline the process wherever you can, reducing deployment time and ensuring that you are always using the most recent, relevant, and high-quality data.
